The RGB values of a pixel depend on three main factors: the reflectance properties of the imaged object, the lighting conditions, and the spectral response of the camera sensor. The color properties of an object are fully characterized by its reflectance spectrum, i.e., the fraction of incident light reflected at each wavelength and angle. This spectrum cannot be recovered from the three color values of an image pixel. Nevertheless, with a calibrated device, three values suffice to characterize a color stimulus under a known illuminant.
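A common simplified model of image formation makes these three factors explicit. It assumes a linear sensor and ignores the angular (geometric) dependence of reflectance. The notation is introduced here for illustration: $E(\lambda)$ is the spectral power distribution of the illuminant, $S(\lambda)$ the surface reflectance, $R_k(\lambda)$ the spectral sensitivity of sensor channel $k$, and $\omega$ the visible wavelength range.

$$
\rho_k = \int_{\omega} E(\lambda)\, S(\lambda)\, R_k(\lambda)\, d\lambda, \qquad k \in \{R, G, B\}
$$

The integral projects a continuous spectrum onto only three numbers. As a result, distinct reflectances can produce the same triplet under one illuminant (metamerism), which is why $S(\lambda)$ cannot be recovered from pixel values alone.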

Image-capturing systems such as scanners and digital cameras do not adapt to the illumination source the way the human visual system does. Scanners typically use fluorescent light sources. The illumination captured by digital cameras varies from scene to scene, and often within a single scene. Additionally, images captured with these devices are viewed under a wide variety of light sources. The white point of monitors varies widely, whereas hardcopy output is usually evaluated using standard daylight (illuminant D50) simulators. To reproduce the appearance of image colors faithfully, every image-processing system must therefore apply a transform that converts colors captured under the input illuminant into the corresponding colors under the output illuminant.
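The text does not name a specific transform. One widely used option is a von Kries-style chromatic adaptation transform. The minimal pure-Python sketch below uses the linearized Bradford matrix to map a CIE XYZ color seen under a D65 white (the sRGB reference white, typical of monitors) to its corresponding color under D50 (the hardcopy viewing condition mentioned above). The function names are illustrative assumptions. The white points are the standard 2° observer values normalized to Y = 1.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = List[List[float]]

# Linearized Bradford matrix: CIE XYZ -> cone-like "sharpened" responses.
BRADFORD: Mat3 = [
    [0.8951, 0.2664, -0.1614],
    [-0.7502, 1.7135, 0.0367],
    [0.0389, -0.0685, 1.0296],
]

# XYZ white points (2-degree observer), normalized so that Y = 1.
D65: Vec3 = (0.95047, 1.0, 1.08883)  # sRGB reference white (monitor)
D50: Vec3 = (0.96422, 1.0, 0.82521)  # graphic-arts viewing (hardcopy)


def mat_vec(m: Mat3, v: Vec3) -> Vec3:
    """Multiply a 3x3 matrix by a 3-vector."""
    return tuple(sum(m[i][k] * v[k] for k in range(3)) for i in range(3))


def mat_inv(m: Mat3) -> Mat3:
    """Invert a 3x3 matrix via its adjugate and determinant."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    return [
        [(e * i - f * h) / det, (c * h - b * i) / det, (b * f - c * e) / det],
        [(f * g - d * i) / det, (a * i - c * g) / det, (c * d - a * f) / det],
        [(d * h - e * g) / det, (b * g - a * h) / det, (a * e - b * d) / det],
    ]


def adapt_xyz(xyz: Vec3, src_white: Vec3, dst_white: Vec3) -> Vec3:
    """Von Kries-style adaptation in Bradford space: scale each cone-like
    response by the ratio of destination to source white responses."""
    lms = mat_vec(BRADFORD, xyz)
    src = mat_vec(BRADFORD, src_white)
    dst = mat_vec(BRADFORD, dst_white)
    scaled = tuple(lms[k] * dst[k] / src[k] for k in range(3))
    return mat_vec(mat_inv(BRADFORD), scaled)


# Example: a skin-like XYZ value viewed on a D65 monitor, re-expressed for D50 hardcopy.
print(adapt_xyz((0.40, 0.35, 0.25), D65, D50))
```

Adapting the source white itself returns the destination white, which is a quick sanity check for any transform of this kind. The full Bradford transform adds a nonlinearity in the blue channel that is omitted here.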

Camera calibration and control of the illuminant are therefore necessary to display correctly rendered colors. Without them, the same scene captured with different uncalibrated consumer cameras yields different pixel values, owing to incomplete illuminant compensation and to the differing characteristics of the cameras.

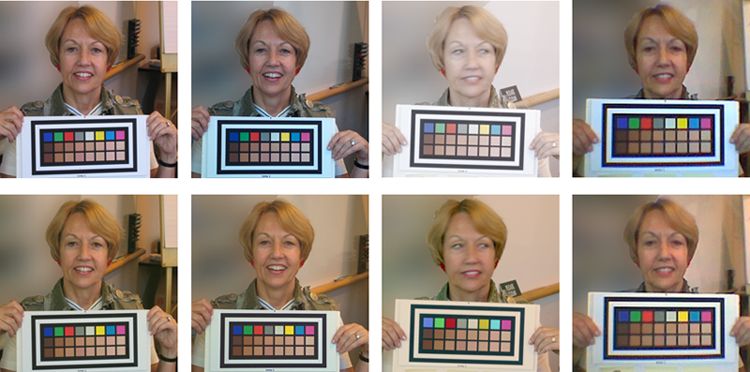

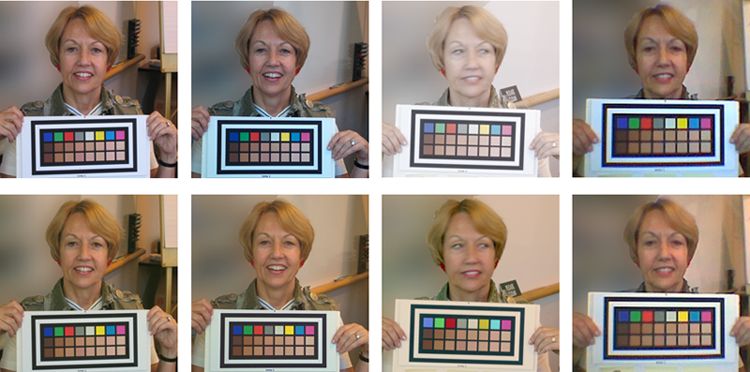

The method we developed performs a post-calibration that corrects skin tones in images taken with a variety of consumer cameras under uncontrolled illumination. The subject is imaged together with a calibration target consisting of patches characteristic of human skin tones. The target is automatically extracted from the image, and the color values within each patch are averaged. These averages are compared with reference values to compute a color transform, which is then applied to the entire image. Finally, the face pixels are extracted and the skin tone is classified.
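The text does not state what form the color transform takes. The minimal sketch below covers the patch-averaging, transform-fitting, and application steps. It assumes a 3×4 affine map fitted by least squares to the averaged patch colors. This is a common choice for target-based correction and is not necessarily the authors' model. The function names `average_patch`, `fit_affine_transform`, and `apply_transform` are hypothetical. Target extraction, face detection, and skin-tone classification are not shown.

```python
from typing import List, Sequence, Tuple

RGB = Tuple[float, float, float]


def average_patch(pixels: Sequence[RGB]) -> RGB:
    """Mean color of the pixels sampled inside one target patch."""
    n = len(pixels)
    return tuple(sum(p[c] for p in pixels) / n for c in range(3))


def _solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve the small dense system a @ x = b (Gaussian elimination, partial pivoting)."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented copy; inputs untouched
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for k in range(col, n + 1):
                m[r][k] -= factor * m[col][k]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):  # back substitution
        x[i] = (m[i][n] - sum(m[i][k] * x[k] for k in range(i + 1, n))) / m[i][i]
    return x


def fit_affine_transform(measured: Sequence[RGB], reference: Sequence[RGB]) -> List[List[float]]:
    """Least-squares 3x4 matrix M such that reference ~= M @ [r, g, b, 1].

    Assumption: an affine model; the source does not specify the transform type.
    """
    rows = [list(p) + [1.0] for p in measured]  # design matrix A, one row per patch
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(4)] for i in range(4)]
    matrix = []
    for c in range(3):  # one independent least-squares fit per output channel
        atb = [sum(r[i] * ref[c] for r, ref in zip(rows, reference)) for i in range(4)]
        matrix.append(_solve(ata, atb))
    return matrix


def apply_transform(matrix: List[List[float]], pixel: RGB) -> RGB:
    """Map one pixel through the fitted affine transform."""
    x = list(pixel) + [1.0]
    return tuple(sum(matrix[c][k] * x[k] for k in range(4)) for c in range(3))
```

In use, each extracted patch would be reduced with `average_patch`, the averages passed to `fit_affine_transform` together with the target's reference values, and `apply_transform` run over every pixel of the image. For real images this step would normally be vectorized. The normal equations are solvable only if at least four patch colors do not lie on a common plane in RGB space. A plain 3×3 linear map (fewer parameters) or a polynomial model (more parameters) are the usual alternatives.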

Figure 1: Uncorrected (first row) and corrected (second row) images for, from left to right, the Canon S400, HP850, and Nikon D1 cameras and the integrated camera of the Nokia 6820 cellphone.

HP Laboratories, Palo Alto, CA, USA

Sabine Süsstrunk